Organizations evolve with models, goals, and baselines enabled by quality data. Data enables organizations to measure and set goals so they can choose starting points, identify relevant comparisons, and determine the desired outcomes of their efforts.

In short, data drives analytics. Organizations use databases to store and retrieve information that later becomes actionable insight. But what exactly is a database? Is it a magical place where data lives? Not so.

Here’s a more formal definition: A database is a collection of data that has a predetermined structure and is stored on a computer for easy access. In most cases, a database management system (DBMS) is responsible for controlling how the database operates. The term “database” is often used loosely to refer to the data itself, the DBMS, and the related applications.

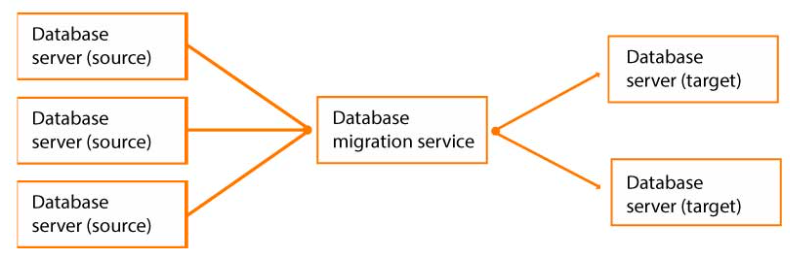

An organization may move away from its existing database over time to save money, improve reliability, increase business scalability, or some other goal. Database migration is the process of moving data from one or more source databases to one or more target databases, which is the topic of this article. After the migration is complete, all data from the source databases will be reflected in the target databases, even if the structure has changed. Clients that were previously using the source databases (e.g. web applications or BI tools) switch to the target databases, then the source databases are closed.

While database migrations are extremely important, data migration projects can be very complex. Data migration often involves downtime, which can disrupt data management operations. That’s why it’s important to understand the risks and best practices of database migration, as well as the tools to streamline the process.

AWS Database Migration Service is a great tool for migrating data from different sources to a database on AWS. Luneba Solutions offers a range of migration proposals on AWS Marketplace, because databases are an integral part of software applications and systems. Each of our migration proposals is customized to the needs of the client.

Next, we’ll cover all the basics of database migration to the cloud to arm you with the best knowledge as you prepare for this important step for your databases.

What is a Database Cloud Migration?

The database migration process includes the following steps:

- Identify the source and target databases.

- Define rules for schema conversion and data mapping. These depend on business needs and/or specific database constraints. This can include adding or removing tables and columns, deleting items, splitting fields, or changing types and constraints.

- Run the actual process of copying the data to the new location.

- (Optional) Configure the continuous replication process.

- Upgrade clients to use the new DB instance.
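The first three steps above can be sketched on a very small scale. The example below is only an illustration, not how AWS DMS works internally: it uses two in-memory SQLite databases, and the table names (`customers_old`, `customers`), the column mapping, and the converters are all hypothetical.

```python
import sqlite3

# Hypothetical mapping rules: source column -> (target column, converter).
# Source columns that are not listed here (e.g. "fax") are dropped.
COLUMN_MAP = {
    "id": ("customer_id", int),                   # change type: TEXT -> INTEGER
    "full_name": ("name", str.strip),             # rename and trim whitespace
    "signup": ("signup_date", lambda v: v[:10]),  # keep only YYYY-MM-DD
}


def migrate_customers(source: sqlite3.Connection, target: sqlite3.Connection) -> int:
    """Copy rows from source.customers_old to target.customers using COLUMN_MAP."""
    target.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL, signup_date TEXT)"
    )
    src_cols = list(COLUMN_MAP)
    dst_cols = [COLUMN_MAP[c][0] for c in src_cols]
    placeholders = ", ".join("?" for _ in dst_cols)
    insert_sql = f"INSERT INTO customers ({', '.join(dst_cols)}) VALUES ({placeholders})"

    copied = 0
    for row in source.execute(f"SELECT {', '.join(src_cols)} FROM customers_old"):
        converted = [COLUMN_MAP[c][1](v) for c, v in zip(src_cols, row)]
        target.execute(insert_sql, converted)
        copied += 1
    target.commit()
    return copied


def demo() -> list:
    """Build a tiny source database, migrate it, and return the target rows."""
    source = sqlite3.connect(":memory:")
    target = sqlite3.connect(":memory:")
    source.execute(
        "CREATE TABLE customers_old (id TEXT, full_name TEXT, signup TEXT, fax TEXT)"
    )
    source.executemany(
        "INSERT INTO customers_old VALUES (?, ?, ?, ?)",
        [("1", " Ada Lovelace ", "2021-03-04T10:00:00", "n/a"),
         ("2", "Alan Turing", "2022-11-30T08:15:00", "n/a")],
    )
    migrate_customers(source, target)
    return target.execute("SELECT * FROM customers ORDER BY customer_id").fetchall()
```

Keeping the mapping rules in a single data structure makes them easy to review before any data is copied.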

It is important to understand that database (data) migration and schema migration are two different processes.

The purpose of database schema migration is to update the existing database structure to meet new requirements. Schema migrations are a programmatic way to handle small, often reversible, changes to data structures. The goal of schema migration software is to make it easier to test, release, and iterate on database changes without data loss. Most migration tools create artifacts that contain the exact operations that must be performed to change a database from a known state to a new one. Standard version control software can be used to review these changes, track them, and share them with other team members. A schema migration usually involves only one database.
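To make the idea of migration artifacts more concrete, here is a minimal sketch of a versioned schema migration. It assumes SQLite and uses its built-in `user_version` pragma to remember the current state. The `MIGRATIONS` list and the `users` table are hypothetical, and real schema migration tools offer much more (rollbacks, checksums, locking).

```python
import sqlite3

# Ordered, hypothetical migration artifacts: (version, SQL that moves the
# schema up to that version). In practice these live in version control.
MIGRATIONS = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    (2, "ALTER TABLE users ADD COLUMN created_at TEXT"),
    (3, "CREATE INDEX idx_users_email ON users (email)"),
]


def current_version(conn: sqlite3.Connection) -> int:
    """Read the schema version stored in the database file (0 if never set)."""
    return conn.execute("PRAGMA user_version").fetchone()[0]


def migrate(conn: sqlite3.Connection) -> int:
    """Apply every migration newer than the stored version; return the new version."""
    for version, statement in MIGRATIONS:
        if version > current_version(conn):
            conn.execute(statement)
            # PRAGMA does not accept bound parameters; version is a trusted int.
            conn.execute(f"PRAGMA user_version = {version}")
            conn.commit()
    return current_version(conn)
```

Running `migrate` a second time is a no-op, because every migration is already recorded as applied.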

Conversely, database migration involves copying both the database schema and the data it contains, as well as all other database components such as views and stored procedures. In most cases, data is copied from one database to another, but sometimes more complex scenarios are required.

Here is an example of what a more complex database migration looks like.

Although data migration is a fairly standard process, it comes with many challenges and risks. To name a few: the two databases can be incompatible in some respect, which requires additional effort. The migration process usually takes a long time. Constant monitoring is required to ensure that no data is lost in the process. When migrating data between two remote locations, you need to establish a secure network connection.

Now that you have a clearer idea of what database migrations to the cloud are all about, let’s look at some of the most common reasons for moving a database.

Why Database Migrations matter

Below is a list of the most common reasons for database migration:

- to save money, e.g. by switching to a license-free database

- to move data from an old system to newer software.

- to move off of commercial databases (Oracle, MS SQL, etc.) to the open source ones (Postgres, MySQL, etc.)

- to introduce a db replica for reliability – kind of a “living” backup (DMS also supports continuous data replication for such scenarios)

- to create a copy of DB instances for different stages, for example for development or testing environments.

- to unify disparate data, so it is accessible by different techniques.

- to upgrade to the latest version of the database software to strengthen security protocols and governance.

- to improve performance and achieve scalability.

- to move from an on-premise database to a cloud-based database.

- to merge data from numerous sources into a centralized database

And as with anything, there are also challenges to consider:

- Data Loss: Data loss is the most common problem companies face during DB migration. During the planning phase, it’s important to plan tests for data loss and data corruption that verify all of the data was transferred correctly.

- Data Security: The most valuable thing a business has is its data, so keeping it safe is of the utmost importance. Setting up data encryption should be a top priority before the DB migration process begins.

- Planning challenges: Large businesses often have different databases in different parts of the business. When planning a database migration, it can be hard to find these databases and figure out how to convert all schemas and normalize data.

- Migration strategy challenges: Database migration isn’t something that is done often, so companies struggle to come up with an optimal migration strategy for their specific needs. It is therefore important to do thorough research before a DB migration.
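As an illustration of the data-loss check from the list above, the sketch below compares a row count and a checksum for the same table in the source and the target. It is a simplified example that assumes both databases are reachable through Python’s `sqlite3` module and small enough to scan completely. The function names are hypothetical. AWS DMS also ships its own data validation option, which this does not replace.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple:
    """Return (row_count, sha256 hex digest) over all rows, ordered by the key column."""
    digest = hashlib.sha256()
    count = 0
    # Table and key names are interpolated into SQL, so they must come from
    # trusted configuration, never from user input.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def tables_match(source: sqlite3.Connection, target: sqlite3.Connection,
                 table: str, key: str) -> bool:
    """True when both sides hold the same rows in the same key order."""
    return table_fingerprint(source, table, key) == table_fingerprint(target, table, key)
```

A mismatch only tells you that something differs. Finding the affected rows requires a finer-grained comparison, for example per key range.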

Cloud migration strategies typically involve seven Rs. Now you’re probably wondering what R has to do with cloud migration, but don’t worry, we’ve got you covered!

In the context of database migration to the cloud, the seven Rs represent different ways to move to or into the cloud. This includes scenarios where a company decides to move its on-premises ERP application to the AWS Cloud for a business unit, or to extend or modify existing AWS Cloud solutions to add new functionality. Each application in this line of business falls into one of the following categories.

- Retire. Decommission the database in the on-premises environment. This involves several steps, such as removing its DNS entry, removing load balancing rules, and shutting down the database itself.

- Retain. Keep some databases on premises when migrating them would cause more problems than benefits. As other parts of your infrastructure migrate to the cloud, it may be necessary to establish a high-speed connection between your organization’s infrastructure and the cloud.

- Relocate. Move database servers from on-premises instances to virtual instances in the cloud without upgrades.

- Rehost. Often referred to as “lift and shift.” In the case of databases, this means moving an on-premises database to the cloud. This option is typically preferred by companies looking to scale quickly to meet today’s business needs. It allows for some additional changes, such as changing the database provider. For example, one rehosting strategy is to move from an on-premises database to a database hosted on an EC2 instance (VM) in the cloud. It is also possible to move to a cloud-managed database offered as a service.

- Repurchase. Also known as “drop and shop,” this is the decision to upgrade to a new or different version of the database server. This option is preferred by organizations looking to change their current licensing model.

- Replatform. The platform change strategy does not change the underlying database architecture but moves it to a new cloud-native database service with the same database engine or a compatible database engine. This means that you can move your databases to the cloud without major data and structure changes while still enjoying the benefits of the cloud.

- Refactor. There is no database refactoring per se. However, it can be very beneficial to make major changes to the application and then migrate the database. Refactoring your application to use a different database type, for example the NoSQL database DynamoDB instead of a standard MySQL relational database, can in many cases reduce the cost of the entire application and/or increase its performance.

What is AWS DMS?

One of the most popular migration tools in the AWS ecosystem is AWS Database Migration Service (AWS DMS). It’s a great tool for moving your data to the cloud as it allows you to store data in a hybrid cloud, transfer data online or offline, or both. It also offers a range of services to help you move your records of any kind.

AWS Database Migration Service (AWS DMS) pricing starts low, and you pay only for the time you actually use. You can also use AWS DMS for free for six months if you transfer your data to any AWS-based database.

Pros:

- It helps a lot to cut down on application downtime.

- It supports both homogeneous (same DBMS as source and target) and heterogeneous (different DBMS) migrations.

- Due to its high availability, it can be used for continuous data migration.

- Supervision of the migration process and automatic remediation.

- Built-in security for data both when it is at rest and when it is being moved.

- Built-in integration with AWS services.

Cons:

- Migrates only a limited subset of DDL (data definition language) objects.

- Does not support some network configurations.

- Does not support migrations between every combination of database versions.

- Requires periodic monitoring.

- Like most database migration tools, it may slow the system down when a lot of data is being processed.

As you can see, AWS DMS allows you to perform one-time migrations and replicate ongoing changes to ensure your sources and targets are always in sync. You can use the AWS Schema Conversion Tool (AWS SCT) to convert your database schema to a new platform if you want to switch to a different database engine. Then transfer the data using AWS DMS. Because AWS DMS is part of the AWS Cloud, you get the convenience, speed to market, security, and flexibility that AWS services provide.

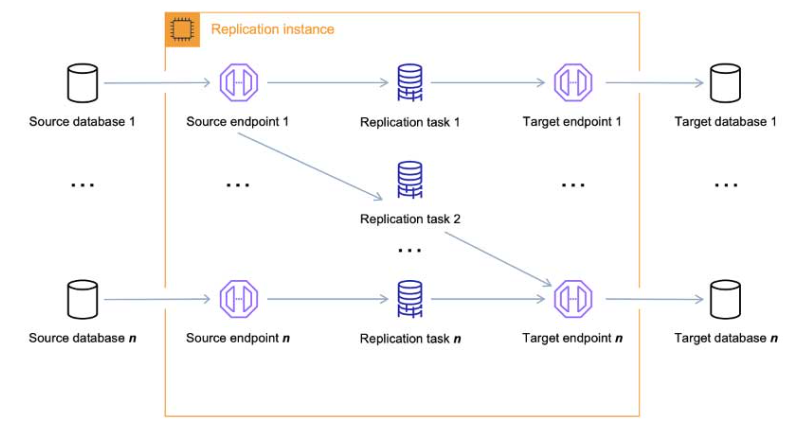

AWS DMS is basically a server in the AWS Cloud that runs replication software. You can create secure source and target connections to tell AWS DMS where data came from and where to store it. You then instruct that server to move your data by setting a task to run on it. If the tables and their primary keys don’t already exist in the target, AWS DMS creates them. If you wish, you can create the target tables yourself in advance. Or you can use the AWS Schema Conversion Tool (AWS SCT) to create some or all of your target tables, indexes, views, triggers, and so on.

How to migrate a Database to AWS?

AWS DMS helps you skip the tedious parts of the migration. Traditionally, you had to perform performance analysis, purchase hardware and software, install and maintain systems, test and repair your installation, and then start all over again. AWS DMS automatically configures, manages, and monitors all hardware and software required for the migration. Once you start the AWS DMS setup process, the migration can begin.

AWS DMS allows you to change the number of migration resources you use based on your activity. For example, if you decide you need more disk space, you can simply add more disk space and start the migration again, which typically only takes a few minutes.

AWS DMS uses a pay-as-you-go model. You only pay for AWS DMS resources when you use them. This differs from traditional licensing models where you pay upfront to purchase a license and then pay for the upgrade.

Let’s review the key considerations when using AWS DMS to migrate databases to the cloud.

Components of AWS DMS

Several internal AWS DMS components work together to make a migration succeed. The better you understand each one, the better equipped you’ll be to troubleshoot problems when they arise.

There are three key AWS DMS components.

Replication instance

In general, an AWS DMS replication instance is simply a managed Amazon Elastic Compute Cloud (Amazon EC2) instance that hosts one or more replication tasks. How many tasks a single replication instance can host depends on the characteristics of the migration and the capacity of the replication server. You can choose the best configuration for your use case from the various replication instance classes provided by AWS DMS.

AWS DMS creates the replication instance on an Amazon EC2 instance. Some of the smaller instance classes are enough to test the service or do small migrations. If you need to migrate a large number of tables or run multiple replication tasks at the same time, consider one of the larger instance classes, because AWS DMS can consume a lot of memory and CPU.

Source and target endpoints

AWS DMS uses an endpoint to access the source or target data store. Depending on the data store, the connection information will vary, but typically you provide the following information when creating an endpoint.

- Endpoint type. Source or target.

- Engine type. Type of database engine.

- Hostname. Server name or IP address that AWS DMS can reach.

- Port. Port number used for database server connections.

- Encryption. Secure Sockets Layer (SSL) mode, if SSL is used to encrypt the connection.

- Credentials. Username and password with required access rights.
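The endpoint fields listed above map fairly directly onto the parameters of the `create_endpoint` call in boto3, the AWS SDK for Python. The sketch below only assembles and validates those parameters. The helper name `build_endpoint_params`, the identifier, the host, and the credentials are hypothetical, and the actual API call is left as a comment because it needs AWS credentials.

```python
def build_endpoint_params(identifier, endpoint_type, engine, host, port,
                          username, password, database=None, ssl_mode="require"):
    """Assemble keyword arguments shaped for boto3's DMS create_endpoint call."""
    if endpoint_type not in ("source", "target"):
        raise ValueError("endpoint_type must be 'source' or 'target'")
    if ssl_mode not in ("none", "require", "verify-ca", "verify-full"):
        raise ValueError(f"unsupported ssl_mode: {ssl_mode}")
    params = {
        "EndpointIdentifier": identifier,
        "EndpointType": endpoint_type,
        "EngineName": engine,
        "ServerName": host,
        "Port": port,
        "Username": username,
        "Password": password,
        "SslMode": ssl_mode,
    }
    if database is not None:
        params["DatabaseName"] = database
    return params


source_params = build_endpoint_params(
    identifier="legacy-orders-source",  # hypothetical endpoint name
    endpoint_type="source",
    engine="postgres",
    host="db.internal.example.com",     # hypothetical host
    port=5432,
    username="dms_user",
    password="change-me",               # load from a secret store in practice
    database="orders",
)

# With AWS credentials configured, the call would look like this (not run here):
# import boto3
# dms = boto3.client("dms")
# endpoint = dms.create_endpoint(**source_params)
```

Validating the values locally catches simple mistakes, such as a misspelled SSL mode, before any request is sent.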

When you use the AWS DMS console to set up your endpoint, the console prompts you to test your connection to the endpoint. Before an endpoint can be used in a DMS task, it must pass the test. Like the login data, the test criteria are also different for each engine type. Typically, AWS DMS verifies that the database with the specified server name and port exists and that the credentials you provide can be used to connect to the database with the required permissions to perform the migration. If the connection test succeeds, AWS DMS retrieves and stores schema information for later use when configuring tasks.

One endpoint can be used by multiple replication tasks. For example, you can move two applications that are logically different but hosted in the same source database. In this case, you run two replication tasks, one for each application’s set of tables. Both tasks can use the same AWS DMS endpoint.

Replication task

You can move a dataset from a source endpoint to a target endpoint using an AWS DMS replication task. Creating a replication task is the last thing you need to do before you start the migration.

When you create a replication task, you choose the following settings for it.

- Replication instance: The instance that will host the task and run it.

- Source endpoint.

- Target endpoint.

- Migration type:

  - Full load: migrate existing data.

  - Full load + CDC: migrate existing data and replicate changes as they happen.

  - Change data capture (CDC) only: replicate only ongoing data changes.

- Target table preparation mode:

  - Do nothing. AWS DMS assumes that the tables on the target have already been created.

  - Drop tables on target. AWS DMS drops the target tables and recreates them.

  - Truncate. If you created tables on the target, AWS DMS truncates them before the migration begins. If you choose this option and there are no tables, AWS DMS creates them.

- Large binary object (LOB) mode:

  - Don’t include LOB columns. LOB columns are left out of the migration.

  - Full LOB mode. Migrate whole LOBs, no matter how big they are.

  - Limited LOB mode. LOBs are truncated to the size set by the Max LOB Size parameter.

- Table mappings, which specify which tables are migrated and how.

- Data transformations:

  - Changing schema, table, and column names.

  - Changing tablespace names (for Oracle target endpoints).

  - Defining primary keys and unique indexes on the target.

- Data validation.

- Logging to Amazon CloudWatch.

Note that an AWS Region is required to create an AWS DMS migration project with the required replication instance, endpoints, and tasks.
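Most of the task options listed above end up in two JSON documents that are passed to the task: the task settings and the table mappings. The sketch below shows one plausible way to build both in Python. The helper names, the task identifier, and the schema names (`sales`, `sales_v2`) are hypothetical, and only a handful of the available settings are shown.

```python
import json


def build_task_settings(prep_mode="DROP_AND_CREATE", lob_max_size_kb=32,
                        validate=True, logging=True) -> str:
    """Return a task-settings JSON string using limited LOB mode."""
    if prep_mode not in ("DO_NOTHING", "DROP_AND_CREATE", "TRUNCATE_BEFORE_LOAD"):
        raise ValueError(f"unknown prep mode: {prep_mode}")
    settings = {
        "FullLoadSettings": {"TargetTablePrepMode": prep_mode},
        "TargetMetadata": {
            "SupportLobs": True,
            "FullLobMode": False,
            "LimitedSizeLobMode": True,
            "LobMaxSize": lob_max_size_kb,  # larger LOBs are truncated
        },
        "ValidationSettings": {"EnableValidation": validate},
        "Logging": {"EnableLogging": logging},
    }
    return json.dumps(settings)


def build_table_mappings(schema: str, new_schema: str) -> str:
    """Select every table in `schema` and rename the schema on the target."""
    rules = [
        {"rule-type": "selection", "rule-id": "1", "rule-name": "include-all",
         "object-locator": {"schema-name": schema, "table-name": "%"},
         "rule-action": "include"},
        {"rule-type": "transformation", "rule-id": "2", "rule-name": "rename-schema",
         "rule-target": "schema",
         "object-locator": {"schema-name": schema},
         "rule-action": "rename", "value": new_schema},
    ]
    return json.dumps({"rules": rules})


task_params = {
    "ReplicationTaskIdentifier": "orders-full-load-and-cdc",  # hypothetical name
    "MigrationType": "full-load-and-cdc",  # or "full-load" / "cdc"
    "TableMappings": build_table_mappings("sales", "sales_v2"),
    "ReplicationTaskSettings": build_task_settings("TRUNCATE_BEFORE_LOAD"),
    # SourceEndpointArn, TargetEndpointArn and ReplicationInstanceArn come from
    # resources created earlier and are intentionally omitted here.
}
```

Once the three ARNs are added, a dictionary like `task_params` could be passed to boto3’s `create_replication_task`.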

Targets for AWS DMS

With AWS DMS, you can use the following data stores as endpoints for data movement.

- On-premises and Amazon EC2 instance databases:

  - Oracle versions 10g, 11g, 12c, 18c, and 19c for the Enterprise, Standard, Standard One, and Standard Two editions.

  - Microsoft SQL Server versions 2005, 2008, 2008R2, 2012, 2014, 2016, 2017, and 2019 for the Enterprise, Standard, Workgroup, and Developer editions. The Web and Express editions are not supported.

  - MySQL versions 5.5, 5.6, 5.7, and 8.0.

  - MariaDB (supported as a MySQL-compatible data target) versions 10.0.24 to 10.0.28, 10.1, 10.2, 10.3 and 10.4.

  - PostgreSQL version 9.4 and later (for versions 9.x), 10.x, 11.x, 12.x, 13.x, and 14.x.

  - SAP Adaptive Server Enterprise (ASE) versions 15, 15.5, 15.7, 16 and later.

  - Redis versions 6.x.

- Amazon RDS instance databases:

  - Oracle versions 11g (versions 11.2.0.3.v1 and later), 12c, 18c, and 19c for the Enterprise, Standard, Standard One, and Standard Two editions.

  - Microsoft SQL Server versions 2012, 2014, 2016, 2017, and 2019 for the Enterprise, Standard, Workgroup, and Developer editions. The Web and Express editions are not supported.

  - MySQL versions 5.5, 5.6, 5.7, and 8.0.

  - MariaDB (supported as a MySQL-compatible data target) versions 10.0.24 to 10.0.28, 10.1, 10.2, 10.3 and 10.4.

  - PostgreSQL version 10.x, 11.x, 12.x, 13.x, and 14.x.

- Amazon SaaS relational databases and NoSQL solutions:

  - Amazon Aurora MySQL-Compatible Edition

  - Amazon Aurora PostgreSQL-Compatible Edition

  - Amazon Aurora Serverless v2

  - Amazon Redshift

  - Amazon S3

  - Amazon DynamoDB

  - Amazon OpenSearch Service

  - Amazon ElastiCache for Redis

  - Amazon Kinesis Data Streams

  - Amazon DocumentDB (with MongoDB compatibility)

  - Amazon Neptune

  - Apache Kafka – Amazon Managed Streaming for Apache Kafka (Amazon MSK) and self-managed Apache Kafka

  - Babelfish (version 1.2.0) for Aurora PostgreSQL (versions 13.4, 13.5, 13.6)

Migration with AWS DMS – best practices

Here are some of the top tips and tricks for using AWS DMS more efficiently.

Converting schema

If your database migration scenario requires a schema change, we recommend using the AWS Schema Conversion Tool (AWS SCT). It handles a wide range of conversions, including code conversions that the built-in AWS DMS schema handling does not support. AWS SCT converts tables, indexes, views, triggers, and more into the DDL format of your target engine.

Putting an idea to the test

After setting up a migration scenario, always test with a smaller subset of data. This will help you to find errors in your approach and estimate the real duration of the migration.

Using your name server onsite

If you are experiencing connectivity issues or slow data transfer times, consider using your own on-premises name server to resolve your AWS endpoints. An AWS DMS replication instance typically uses the Domain Name System (DNS) resolver in an Amazon EC2 instance to resolve domain endpoints. However, with the Amazon Route 53 Resolver, you can route queries to an on-premises name server. Once a secure connection is established between your network and AWS, you can send and receive queries using this tool’s inbound and outbound endpoints, forwarding rules, and private connection. Using an on-premises name server improves security and is easier to use behind a firewall.

Using row filtering to improve performance when migrating large tables

When migrating a large table, it can be helpful to break the process down into smaller, more manageable tasks. Split the table into chunks using a key or partition key, and then use row filtering so that each task migrates one chunk. For example, if the integer primary key ID ranges from 1 to 8,000,000, you can use row filtering to create eight tasks that migrate 1,000,000 records each.
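The eight-task example can be sketched as a small generator of table-mapping documents, one per key range, each using a `between` source filter. The schema, table, and column names (`sales`, `orders`, `id`) and the helper name are hypothetical. Each resulting document would be attached to its own replication task.

```python
import json


def chunked_table_mappings(schema, table, column, first_id, last_id, chunks):
    """Return one table-mapping JSON document per contiguous key range."""
    total = last_id - first_id + 1
    size = -(-total // chunks)  # ceiling division
    documents = []
    for start in range(first_id, last_id + 1, size):
        end = min(start + size - 1, last_id)
        rule = {
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": f"{table}-{start}-{end}",
            "object-locator": {"schema-name": schema, "table-name": table},
            "rule-action": "include",
            "filters": [{
                "filter-type": "source",
                "column-name": column,
                "filter-conditions": [{
                    "filter-operator": "between",
                    "start-value": str(start),
                    "end-value": str(end),
                }],
            }],
        }
        documents.append(json.dumps({"rules": [rule]}))
    return documents


# Eight documents, each covering 1,000,000 consecutive IDs.
mappings = chunked_table_mappings("sales", "orders", "id", 1, 8_000_000, 8)
```

Equal-width ranges only balance the work when the IDs are densely populated. Tables with large gaps in the key may need ranges chosen from actual row counts.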

Reducing the load on your source database

AWS DMS consumes some resources on your source database. For each table processed in parallel, AWS DMS performs a full scan of the source table during a full load. In addition, each migration task you run uses the CDC process to track changes at the source. For example, if you use Oracle and want AWS DMS to perform CDC, you might need to increase the amount of data written to the change log.

Wrapping up

Luneba Solutions is a leading technology company that helps companies improve their performance by creating custom software for them to move their databases. We have a team of trained professionals who can help you migrate your database successfully, with a focus on cloud database migrations and AWS DMS. We can assess your current database to create a move plan and ensure that all appropriate precautions are taken to ensure no data is lost during the database move.